Jammify

Interactive light display system to promote impromptu digital art jamming

Augmented Human Lab, Auckland Bioengineering Institute, The University of Auckland: Sachith Muthukumarana (LK), Don Samitha Elvitigala (LK), Qin Wu (CN), Yun Suen Pai (MY), Suranga Nanayakkara (SG)

Project Description

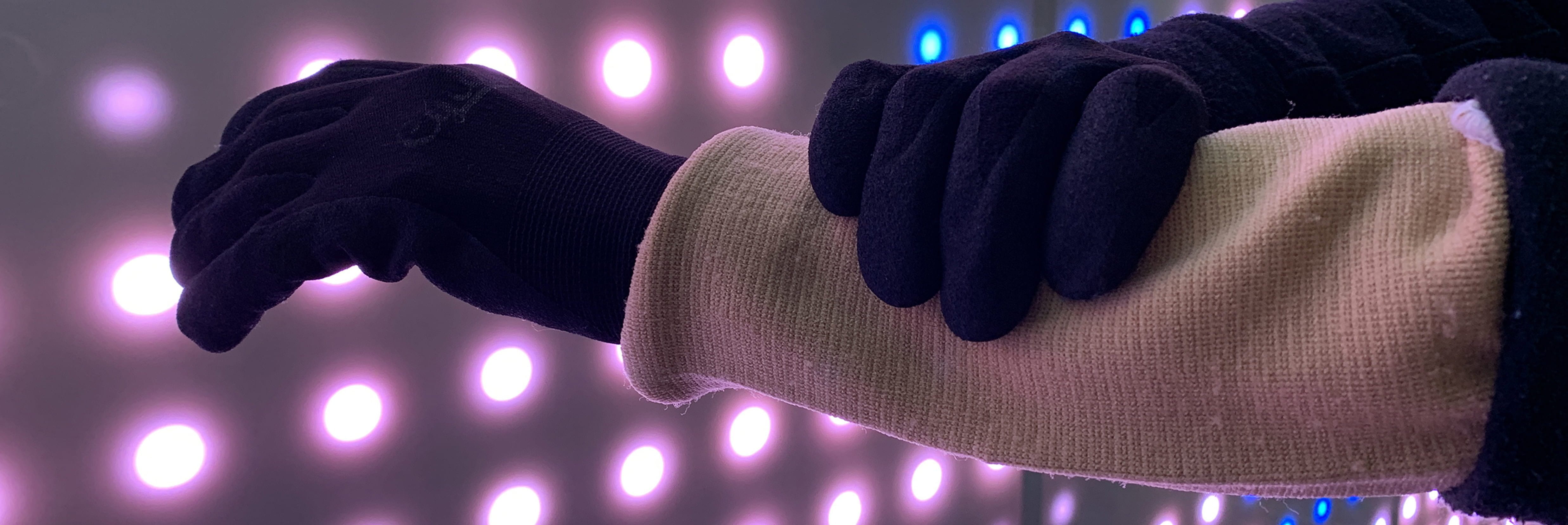

Jammify is motivated by the desire to give busy, hard-working scholars at our institute a playful jamming experience. It invites users to ‘play’ and engage in relaxed interactions, letting them take a ‘break’ from mentally demanding everyday work. The current implementation of Jammify consists of a two-sided, large-scale LED display and a wearable forearm augmentation. Two individuals can draw, one on each side of the LED display, while sharing emotive touch through forearm sleeves that recreate subtle touch sensations on each other’s hands, creating a collective, playful interaction. The entire canvas has 1800 individually addressable, full-color LED nodes. Each arm sleeve has two layer stacks: a sensing stack, which uses a force-sensitive resistor (FSR) matrix to sense and classify touch gestures performed on the forearm, and an actuation stack, which recreates touch sensations on the skin by actuating a matrix of shape-memory-alloy (SMA) based plasters.

Project Credits/Acknowledgements

This work was supported by the Assistive Augmentation research grant under the Entrepreneurial Universities (EU) initiative of New Zealand.

Website

Artist Bios

Sachith Muthukumarana (LK): Sachith is a PhD candidate at the Augmented Human Lab in the Auckland Bioengineering Institute of the University of Auckland, New Zealand. He first joined the Augmented Human Lab as an intern while completing his undergraduate degree in Electronics and Telecommunication Engineering, and then began pursuing his PhD in Human-Computer Interaction.

Don Samitha Elvitigala (LK): Samitha is a final-year PhD candidate at the Augmented Human Lab in the Auckland Bioengineering Institute of the University of Auckland. His PhD research in Human-Computer Interaction explores novel wearable interfaces that can implicitly understand the physical and mental behaviors of humans. His research interests include HCI, Ubiquitous Computing, and Assistive Augmentation.

Qin Wu (CN): Qin is a designer at the Augmented Human Lab in the Auckland Bioengineering Institute of the University of Auckland. She received an M.F.A. degree in information arts and design from Tsinghua University, China, in 2016. Her research interests include human-computer interaction, user experience, and interaction design. She is also the lab director of, and leads, the Interactive Media Studio (imslab.design).

Yun Suen Pai (MY): Dr. Yun Suen Pai is currently a Postdoctoral Research Fellow at the Empathic Computing Laboratory, University of Auckland. His research interests include the effects of augmented, virtual, and mixed reality on human perception, behavior, and physiological state. He has also worked on haptics in AR, vision augmentation, VR navigation, and machine learning for novel input and interactions.

Suranga Nanayakkara (SG): Associate Professor Suranga Nanayakkara directs the Augmented Human Lab at the Auckland Bioengineering Institute. His vision is to design technologies that learn and adapt to us, instead of the other way around. He has won many awards for the societal impact, originality, and creativity of his work, including recognition as one of MIT Technology Review's young innovators under 35 (TR35 award) in the Asia Pacific region, and an INK Fellowship.